LSTM seq2seq VAE

import sys

import warnings

if not sys.warnoptions:

warnings.simplefilter('ignore')

In [2]:

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

import pandas as pd

from sklearn.preprocessing import MinMaxScaler

from datetime import datetime

from datetime import timedelta

from tqdm import tqdm

sns.set()

tf.compat.v1.random.set_random_seed(1234)

In [3]:

df = pd.read_csv('../dataset/GOOG-year.csv')

df.head()

Out[3]:

| Date | Open | High | Low | Close | Adj Close | Volume | |

| 0 | 2016-11-02 | 778.200012 | 781.650024 | 763.450012 | 768.700012 | 768.700012 | 1872400 |

| 1 | 2016-11-03 | 767.250000 | 769.950012 | 759.030029 | 762.130005 | 762.130005 | 1943200 |

| 2 | 2016-11-04 | 750.659973 | 770.359985 | 750.560974 | 762.020020 | 762.020020 | 2134800 |

| 3 | 2016-11-07 | 774.500000 | 785.190002 | 772.549988 | 782.520020 | 782.520020 | 1585100 |

| 4 | 2016-11-08 | 783.400024 | 795.632996 | 780.190002 | 790.510010 | 790.510010 | 1350800 |

In [4]:

minmax = MinMaxScaler().fit(df.iloc[:, 4:5].astype('float32')) # Close index

df_log = minmax.transform(df.iloc[:, 4:5].astype('float32')) # Close index

df_log = pd.DataFrame(df_log)

df_log.head()

Out[4]:

| 0 | |

| 0 | 0.112708 |

| 1 | 0.090008 |

| 2 | 0.089628 |

| 3 | 0.160459 |

| 4 | 0.188066 |

Split train and test

I will cut the dataset to train and test datasets,

Train dataset derived from starting timestamp until last 30 days

Test dataset derived from last 30 days until end of the dataset

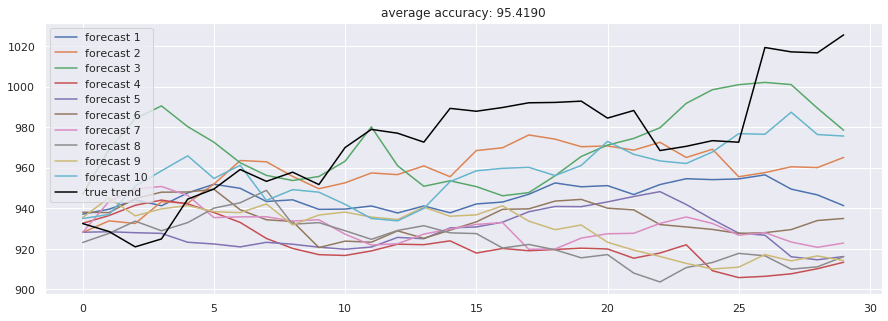

So we will let the model do forecasting based on last 30 days, and we will going to repeat the experiment for 10 times. You can increase it locally if you want, and tuning parameters will help you by a lot.

In [5]:

test_size = 30

simulation_size = 10

df_train = df_log.iloc[:-test_size]

df_test = df_log.iloc[-test_size:]

df.shape, df_train.shape, df_test.shape

Out[5]:

((252, 7), (222, 1), (30, 1))

In [6]:

class Model:

def __init__(

self,

learning_rate,

num_layers,

size,

size_layer,

output_size,

forget_bias = 0.1,

lambda_coeff = 0.5

):

def lstm_cell(size_layer):

return tf.nn.rnn_cell.LSTMCell(size_layer, state_is_tuple = False)

rnn_cells = tf.nn.rnn_cell.MultiRNNCell(

[lstm_cell(size_layer) for _ in range(num_layers)],

state_is_tuple = False,

)

self.X = tf.placeholder(tf.float32, (None, None, size))

self.Y = tf.placeholder(tf.float32, (None, output_size))

drop = tf.contrib.rnn.DropoutWrapper(

rnn_cells, output_keep_prob = forget_bias

)

self.hidden_layer = tf.placeholder(

tf.float32, (None, num_layers * 2 * size_layer)

)

_, last_state = tf.nn.dynamic_rnn(

drop, self.X, initial_state = self.hidden_layer, dtype = tf.float32

)

self.z_mean = tf.layers.dense(last_state, size)

self.z_log_sigma = tf.layers.dense(last_state, size)

epsilon = tf.random_normal(tf.shape(self.z_log_sigma))

self.z_vector = self.z_mean + tf.exp(self.z_log_sigma)

with tf.variable_scope('decoder', reuse = False):

rnn_cells_dec = tf.nn.rnn_cell.MultiRNNCell(

[lstm_cell(size_layer) for _ in range(num_layers)], state_is_tuple = False

)

drop_dec = tf.contrib.rnn.DropoutWrapper(

rnn_cells_dec, output_keep_prob = forget_bias

)

x = tf.concat([tf.expand_dims(self.z_vector, axis=0), self.X], axis = 1)

self.outputs, self.last_state = tf.nn.dynamic_rnn(

drop_dec, self.X, initial_state = last_state, dtype = tf.float32

)

self.logits = tf.layers.dense(self.outputs[-1], output_size)

self.lambda_coeff = lambda_coeff

self.kl_loss = -0.5 * tf.reduce_sum(1.0 + 2 * self.z_log_sigma - self.z_mean ** 2 -

tf.exp(2 * self.z_log_sigma), 1)

self.kl_loss = tf.scalar_mul(self.lambda_coeff, self.kl_loss)

self.cost = tf.reduce_mean(tf.square(self.Y - self.logits) + self.kl_loss)

self.optimizer = tf.train.AdamOptimizer(learning_rate).minimize(

self.cost

)

def calculate_accuracy(real, predict):

real = np.array(real) + 1

predict = np.array(predict) + 1

percentage = 1 - np.sqrt(np.mean(np.square((real - predict) / real)))

return percentage * 100

def anchor(signal, weight):

buffer = []

last = signal[0]

for i in signal:

smoothed_val = last * weight + (1 - weight) * i

buffer.append(smoothed_val)

last = smoothed_val

return buffer

In [7]:

num_layers = 1

size_layer = 128

timestamp = 5

epoch = 300

dropout_rate = 0.8

future_day = test_size

learning_rate = 0.01

In [8]:

def forecast():

tf.reset_default_graph()

modelnn = Model(

learning_rate, num_layers, df_log.shape[1], size_layer, df_log.shape[1], dropout_rate

)

sess = tf.InteractiveSession()

sess.run(tf.global_variables_initializer())

date_ori = pd.to_datetime(df.iloc[:, 0]).tolist()

pbar = tqdm(range(epoch), desc = 'train loop')

for i in pbar:

init_value = np.zeros((1, num_layers * 2 * size_layer))

total_loss, total_acc = [], []

for k in range(0, df_train.shape[0] - 1, timestamp):

index = min(k + timestamp, df_train.shape[0] - 1)

batch_x = np.expand_dims(

df_train.iloc[k : index, :].values, axis = 0

)

batch_x = np.random.binomial(1, 0.5, batch_x.shape) * batch_x

batch_y = df_train.iloc[k + 1 : index + 1, :].values

logits, last_state, _, loss = sess.run(

[modelnn.logits, modelnn.last_state, modelnn.optimizer, modelnn.cost],

feed_dict = {

modelnn.X: batch_x,

modelnn.Y: batch_y,

modelnn.hidden_layer: init_value,

},

)

init_value = last_state

total_loss.append(loss)

total_acc.append(calculate_accuracy(batch_y[:, 0], logits[:, 0]))

pbar.set_postfix(cost = np.mean(total_loss), acc = np.mean(total_acc))

future_day = test_size

output_predict = np.zeros((df_train.shape[0] + future_day, df_train.shape[1]))

output_predict[0] = df_train.iloc[0]

upper_b = (df_train.shape[0] // timestamp) * timestamp

init_value = np.zeros((1, num_layers * 2 * size_layer))

for k in range(0, (df_train.shape[0] // timestamp) * timestamp, timestamp):

out_logits, last_state = sess.run(

[modelnn.logits, modelnn.last_state],

feed_dict = {

modelnn.X: np.expand_dims(

df_train.iloc[k : k + timestamp], axis = 0

),

modelnn.hidden_layer: init_value,

},

)

init_value = last_state

output_predict[k + 1 : k + timestamp + 1] = out_logits

if upper_b != df_train.shape[0]:

out_logits, last_state = sess.run(

[modelnn.logits, modelnn.last_state],

feed_dict = {

modelnn.X: np.expand_dims(df_train.iloc[upper_b:], axis = 0),

modelnn.hidden_layer: init_value,

},

)

output_predict[upper_b + 1 : df_train.shape[0] + 1] = out_logits

future_day -= 1

date_ori.append(date_ori[-1] + timedelta(days = 1))

init_value = last_state

for i in range(future_day):

o = output_predict[-future_day - timestamp + i:-future_day + i]

out_logits, last_state = sess.run(

[modelnn.logits, modelnn.last_state],

feed_dict = {

modelnn.X: np.expand_dims(o, axis = 0),

modelnn.hidden_layer: init_value,

},

)

init_value = last_state

output_predict[-future_day + i] = out_logits[-1]

date_ori.append(date_ori[-1] + timedelta(days = 1))

output_predict = minmax.inverse_transform(output_predict)

deep_future = anchor(output_predict[:, 0], 0.3)

return deep_future[-test_size:]

In [9]:

results = []

for i in range(simulation_size):

print('simulation %d'%(i + 1))

results.append(forecast())

WARNING: Logging before flag parsing goes to stderr.

W0816 15:26:45.502804 139658996016960 deprecation.py:323] From <ipython-input-6-d907d7a4dee6>:13: LSTMCell.__init__ (from tensorflow.python.ops.rnn_cell_impl) is deprecated and will be removed in a future version.

Instructions for updating:

This class is equivalent as tf.keras.layers.LSTMCell, and will be replaced by that in Tensorflow 2.0.

W0816 15:26:45.505823 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f04dbc873c8>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

W0816 15:26:45.507445 139658996016960 deprecation.py:323] From <ipython-input-6-d907d7a4dee6>:17: MultiRNNCell.__init__ (from tensorflow.python.ops.rnn_cell_impl) is deprecated and will be removed in a future version.

Instructions for updating:

This class is equivalent as tf.keras.layers.StackedRNNCells, and will be replaced by that in Tensorflow 2.0.

simulation 1

W0816 15:26:45.829126 139658996016960 lazy_loader.py:50]

The TensorFlow contrib module will not be included in TensorFlow 2.0.

For more information, please see:

* https://github.com/tensorflow/community/blob/master/rfcs/20180907-contrib-sunset.md

* https://github.com/tensorflow/addons

* https://github.com/tensorflow/io (for I/O related ops)

If you depend on functionality not listed there, please file an issue.

W0816 15:26:45.832581 139658996016960 deprecation.py:323] From <ipython-input-6-d907d7a4dee6>:28: dynamic_rnn (from tensorflow.python.ops.rnn) is deprecated and will be removed in a future version.

Instructions for updating:

Please use `keras.layers.RNN(cell)`, which is equivalent to this API

W0816 15:26:46.024316 139658996016960 deprecation.py:506] From /usr/local/lib/python3.6/dist-packages/tensorflow/python/ops/init_ops.py:1251: calling VarianceScaling.__init__ (from tensorflow.python.ops.init_ops) with dtype is deprecated and will be removed in a future version.

Instructions for updating:

Call initializer instance with the dtype argument instead of passing it to the constructor

W0816 15:26:46.031064 139658996016960 deprecation.py:506] From /usr/local/lib/python3.6/dist-packages/tensorflow/python/ops/rnn_cell_impl.py:961: calling Zeros.__init__ (from tensorflow.python.ops.init_ops) with dtype is deprecated and will be removed in a future version.

Instructions for updating:

Call initializer instance with the dtype argument instead of passing it to the constructor

W0816 15:26:46.507349 139658996016960 deprecation.py:323] From <ipython-input-6-d907d7a4dee6>:31: dense (from tensorflow.python.layers.core) is deprecated and will be removed in a future version.

Instructions for updating:

Use keras.layers.dense instead.

W0816 15:26:46.696353 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f04778236d8>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

W0816 15:26:46.879564 139658996016960 deprecation.py:323] From /usr/local/lib/python3.6/dist-packages/tensorflow/python/ops/math_grad.py:1205: add_dispatch_support.<locals>.wrapper (from tensorflow.python.ops.array_ops) is deprecated and will be removed in a future version.

Instructions for updating:

Use tf.where in 2.0, which has the same broadcast rule as np.where

train loop: 100%|██████████| 300/300 [01:47<00:00, 2.80it/s, acc=97, cost=0.00235]

W0816 15:28:35.363878 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f0455d7deb8>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

W0816 15:28:35.471002 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f044b3bf198>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

simulation 2

train loop: 100%|██████████| 300/300 [01:46<00:00, 2.82it/s, acc=96.9, cost=0.00305]

W0816 15:30:22.970038 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f044b349f60>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

W0816 15:30:23.075726 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f03f03e0e10>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

simulation 3

train loop: 100%|██████████| 300/300 [01:47<00:00, 2.77it/s, acc=95.1, cost=0.00633]

W0816 15:32:11.926008 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f03f043bfd0>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

W0816 15:32:12.031505 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f03a4bb2a58>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

simulation 4

train loop: 100%|██████████| 300/300 [01:47<00:00, 2.86it/s, acc=95.9, cost=0.00422]

W0816 15:34:00.252120 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f03f0194f60>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

simulation 5

W0816 15:34:00.478516 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f044c079320>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

train loop: 100%|██████████| 300/300 [01:48<00:00, 2.75it/s, acc=96.3, cost=0.00351]

W0816 15:35:49.588577 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f03965d9940>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

W0816 15:35:49.693055 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f0393f722e8>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

simulation 6

train loop: 100%|██████████| 300/300 [01:47<00:00, 2.79it/s, acc=96.2, cost=0.00384]

W0816 15:37:38.517486 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f0393f489e8>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

W0816 15:37:38.625684 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f039189c940>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

simulation 7

train loop: 100%|██████████| 300/300 [01:47<00:00, 2.79it/s, acc=95.6, cost=0.00472]

W0816 15:39:27.256033 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f0391929fd0>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

W0816 15:39:27.363451 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f038f1f4d68>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

simulation 8

train loop: 100%|██████████| 300/300 [01:47<00:00, 2.78it/s, acc=96.1, cost=0.00394]

W0816 15:41:15.619689 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f038f286940>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

W0816 15:41:15.724680 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f038cb1fda0>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

simulation 9

train loop: 100%|██████████| 300/300 [01:47<00:00, 2.82it/s, acc=97.3, cost=0.00223]

W0816 15:43:04.145420 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f038d74f630>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

W0816 15:43:04.251741 139658996016960 rnn_cell_impl.py:893] <tensorflow.python.ops.rnn_cell_impl.LSTMCell object at 0x7f0388c03d68>: Using a concatenated state is slower and will soon be deprecated. Use state_is_tuple=True.

simulation 10

train loop: 100%|██████████| 300/300 [01:45<00:00, 2.82it/s, acc=96.6, cost=0.00292]

In [10]:

accuracies = [calculate_accuracy(df['Close'].iloc[-test_size:].values, r) for r in results]

plt.figure(figsize = (15, 5))

for no, r in enumerate(results):

plt.plot(r, label = 'forecast %d'%(no + 1))

plt.plot(df['Close'].iloc[-test_size:].values, label = 'true trend', c = 'black')

plt.legend()

plt.title('average accuracy: %.4f'%(np.mean(accuracies)))

plt.show()